To great fanfare, Oxford Dictionaries chose "post-truth" as its international word of the year.

The use of “post-truth” -- defined as “relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief” -- increased by 2,000 percent over last year, according to analysis of the Oxford English Corpus, which collects roughly 150 million words of spoken and written English from various sources each month. (New York Times)

I introduce this into a science blog because, well, I see some parallels with science. As most of us know, Thomas Kuhn, in his iconic book, The Structure of Scientific Revolutions, wrote about "normal science", how scientists go about their work on a daily basis, theorizing, experimenting, and synthesizing based on a paradigm, a world view that is agreed upon by the majority of scientists. (Although not well recognized, Kuhn was preceded in this by Ludwik Fleck, a Polish and Israeli physician and biologist who, way back in the 1930s, used the term 'thought collective' for the same basic idea.)

When thoughtful observers recognize that an unwieldy number of facts no longer fit the prevailing paradigm, and develop a new synthesis of current knowledge, a 'scientific revolution' occurs and matures into a new normal science. In the 5th post in Ken's recent thought-provoking series on genetics as metaphysics, he reminded us of some major 'paradigm shifts' in the history of science -- plate tectonics, relativity and the theory of evolution itself.

We have learned a lot in the last century, but there are 'facts' that don't fit into the prevailing gene-centered, enumerative, reductive approach to understanding prediction and causation, our current paradigm. If you've read the MT for a while, you know that this is an idea we've often kicked around. In 2013 Ken made a list of 'strange facts' in a post he called "Are we there yet or do strange things about life require new thinking?" I repost that list below because I think it's worth considering again the kinds of facts that should challenge our current paradigm.

As scientists, our world view is supposed to be based on truth. We know that climate change is happening, that it's automation not immigration that's threatening jobs in the US, that fossil fuels are in many places now more costly than wind or solar. But by and large, we know these things not because we personally do research into them all -- we can't -- but because we believe the scientists who do carry out the research and who tell us what they find. In that sense, our world views are faith-based. Scientists are human, and have vested interests and personal world views, and seek credit, and so on, but generally they are trustworthy about reporting facts and the nature of actual evidence, even if they advocate their preferred interpretation of the facts, and even if scientists, like anyone else, do their best to support their views and even their biases.

Closer to home, as geneticists, our world view is also faith-based in that we interpret our observations based on a theory or paradigm that we can't possibly test every time we invoke it, but that we simply accept. The current 'normal' biology is couched in the evolutionary paradigm often based on ideas of strongly specific natural selection, and genetics in the primacy of the gene.

The US Congress just passed a massive bill in support of normal science, the "21st Century Cures Act", with funding for the blatant marketing ploys of the brain connectome project, the push for "Precision Medicine" (first "Personalized Medicine", this endeavor has been rebranded -- cynically? -- yet again, to "All of Us") and the new war on cancer. These projects are nothing if not born of our current paradigm in the life sciences: reductive enumeration of causation and the ability to predict disease. But the many well-known challenges to this paradigm lead us to predict that, like the Human Genome Project, which among other things was supposed to lead to the cure of all disease by 2020, these endeavors can't fulfill their promise.

To a great if not even fundamental extent, this branding is about securing societal resources for projects too big and costly to kill, in a way similar to any advertising or even to the way churches promise heaven when they pass the plate. But it relies on widespread acceptance of contemporary 'normal science', despite the unwieldy number of well-known, misfitting facts. Even science is now perilously close to 'post-truth' science. This sort of dissembling is deeply built into our culture at present.

We've got brilliant scientists doing excellent work, turning out interesting results every day, and brilliant science journalists who describe and publicize their new findings. But it's almost all done within, and accepting, the working paradigm. Too few scientists, and even fewer writers who communicate their science, are challenging that paradigm and pushing our understanding forward. Scientists, insecure and scrambling not just for insight but for their very jobs, are pressed explicitly or implicitly to toe the current party line. In a very real sense, we're becoming more dedicated to faith-based science than we are to truth.

Neither Ken nor I am certain that a new paradigm is necessary, or that it's right around the corner. How could we know? But there are enough 'strange facts' that don't fit the current paradigm, centered around genes as discrete, independent causal units, that we think it's worth asking whether a new synthesis that can incorporate these facts might be necessary. It's possible, as we've often said, that we already know everything we need to know: that biology is complex, genetics is interactive not iterative, every genome is unique and interacts with unique individual histories of exposures to environmental risk factors, evolution generates difference rather than replicability, and we will never be able to predict complex disease 'precisely'.

But it's also possible that there are new ways to think about what we know, beyond statistics and population-based observations, to better understand causation. There are many facts that don't fit the current paradigm, and more smart scientists should be thinking about this as they carry on with their normal science.

---------------------------------

Do strange things about life require new concepts?

1. The linear view of genetic causation (cis effects of gene function, for the cognoscenti) is clearly inaccurate. Gene regulation and usage are largely, if not mainly, not just local to a given chromosome region (they are trans);

2. Chromosomal usage is 4-dimensional within the nucleus, not even 3-dimensional, because arrangements are changing with circumstances, that is, with time;

3. There is a large amount of inter-genic and inter-chromosomal communication leading to selective expression and non-expression at individual locations and across the genome (e.g., monoallelic expression). Thousands of local areas of chromosomes wrap and unwrap dynamically depending on species, cell type, environmental conditions, and the state of other parts of the genome at a given time;

4. There is all sorts of post-transcription modification (e.g., RNA editing, chaperoning) that is a further part of 4-D causation;

5. There is environmental feedback in terms of gene usage, some of which (epigenetic marking) can be inherited and borders on being 'Lamarckian';

6. There are dynamic symbioses as a fundamental and pervasive rather than just incidental and occasional part of life (e.g., microbes in humans);

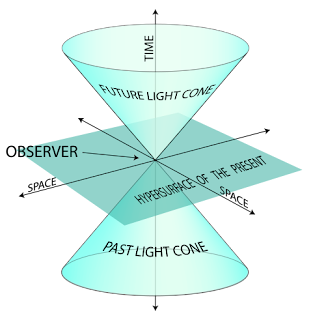

7. There is no such thing as 'the' human genome from which deviations are measured. Likewise, there is no evolution of 'the' human and chimpanzee genome from 'the' genome of a common ancestor. Instead, perhaps conceptually like event cones in physics, where the speed of light constrains what has happened or can happen, there are descent cones of genomic variation descending from individual sequences--time-dependent spreading of variation, with time-dependent limitations. They intertwine among individuals, though each individual's is unique. There is a past cone of ancestry leading to each current instance of a genome sequence, from an ever-widening set of ancestors (as one goes back in time), and a future cone of descendants and their variation that's affected by mutations. There are descent cones in the genomes among organisms, and among organisms in a species, and between species. This is of course just a heuristic, not an attempt at a literal simile or to steal ideas from physics!

Light cone: Wikipedia

8. Descent cones exist among the cells and tissues within each organism, because of somatic mutation, but the metaphor breaks down because they have strangely singular rather than complex ancestry: within an individual they go back to a point, a single fertilized egg, and among individuals to life's Big Bang;

9. For the previous reasons, all genomes represent 'point' variations (instances) around a non-existent core that we conceptually refer to as 'species' or 'organs', etc. ('the' human genome, 'the' giraffe, etc.);

10. Enumerating causation by statistical sampling methods is often impossible (literally) because rare variants don't have enough copies to generate 'significance', significance criteria are subjective, and/or because many variants have effects too small to generate significance;

11. Natural selection, which along with chance (drift) generates current variation, is usually so weak that it cannot be demonstrated, often in principle, for similar statistical reasons: if the cause of a trait is too weak to show, the cause of fitness is too weak to show; there is not just one way to be 'adapted';

12. Alleles and genotypes have effects that are inherently relativistic. They depend upon context, and each organism's context is different;

13. Perhaps analogously with the ideal gas law and its like, phenotypes seem to have coherence. We each have a height or blood pressure, despite all the variation noted above. In populations of people, or organs, we find ordinary (e.g., 'bell-shaped') distributions, that may be the result of a 'law' of large numbers: just as human genomes are variation around a 'platonic' core, so blood pressure is the net result of individual action of many cells. And biological traits are typically always changing;

14. 'Environment' (itself a vague catch-all term) has very unclear effects on traits. Genomic-based risks are retrospectively assessed but future environments cannot, in principle, be known, so that genomic-based prediction is an illusion of unclear precision;

15. The typical picture is of many-to-many genomic (and other) causation for which many causes can lead to the same result (polygenic equivalence), and many results can be due to the same cause (pleiotropy);

16. Our reductionist models, even those that deal with networks, badly under-include interactions and complementarity. We are prisoners of single-cause thinking, which is only reinforced by strongly adaptationist Darwinism that, to this day, makes us think deterministically and in terms of competition, even though life is manifestly a phenomenon of molecular cooperation (interaction). We have no theory for the form of these interactions (simple multiplicative? geometric?).

17. In a sense all molecular reactions are about entropy, energy, and interaction among different molecules or whatever. But while ordinary nonliving molecular reactions converge on some result, life is generally about increasing difference, because life is an evolutionary phenomenon.

18. DNA is itself a quasi-random, inert sequence. Its properties come entirely from spatial, temporal, combinatorial ('Boolean'-like) relationships. This context works only because of what else is in (and on the immediate outside of) the cell at the given time, a regress back to the origin of life.
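Item 13 above, the idea that ordinary 'bell-shaped' distributions may reflect a 'law' of large numbers, can be illustrated with a toy simulation. This is only a sketch under loudly stated assumptions: the function names, the number of factors, and above all the premise that contributions are independent, equal, and additive are hypothetical choices made for illustration. Item 16 argues that real biology is not like this.

```python
import random
import statistics


def simulate_trait(n_individuals=5000, n_factors=200, seed=1):
    """Toy model (an assumption, not real biology): each individual's
    trait value is the sum of many small, independent contributions."""
    rng = random.Random(seed)
    traits = []
    for _ in range(n_individuals):
        # Each hypothetical factor adds 1 with probability 0.5, else 0.
        traits.append(sum(rng.random() < 0.5 for _ in range(n_factors)))
    return traits


def fraction_within(traits, k):
    """Fraction of individuals within k standard deviations of the mean."""
    mean = statistics.fmean(traits)
    sd = statistics.pstdev(traits)
    return sum(abs(t - mean) <= k * sd for t in traits) / len(traits)


traits = simulate_trait()
print("mean:", statistics.fmean(traits))
print("sd:", statistics.pstdev(traits))
print("within 1 sd:", fraction_within(traits, 1))
print("within 2 sd:", fraction_within(traits, 2))
```

Under these assumptions most individuals cluster near the mean, with roughly bell-shaped tails, even though no two simulated individuals need share the same set of contributing factors. The coherence of the population-level pattern says little about which factors matter in any one individual.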

5 comments:

Hello Anne,

Could you expand on two of your endnotes?

#10 - "enumerating causation by statistical sampling is often impossible because rare variants ..." Are you referring to the fallibilism of statistical (or any) induction? You, the scientist, get to set the sampling size and criteria and as with any instrument the capabilities are finite.

#16 - "Our reductionist models, even those that deal with networks, badly under-include interactions and complementarity. We are prisoners of single-cause thinking ..." I am not that familiar with biological models, but epidemiological and marketing networks are quite capable of representing multiple component (INUS) causation (e.g. Rothman's causal pies/sufficient components and Pearl's networks). Complementarity (as I understand it) is also included, where a single "causand" can both inhibit and disinhibit an outcome, depending on the context in which it occurs. If you require "statistical significance" to include an arc, your ability to detect interactions will be limited by your sample.

In practice, I personally had no problem ordering nodes based on theoretical or other considerations. The proof of a network was in its (out-of-sample) predictions.

Thanks

Bill, thanks for your comment. I'll answer briefly, but I think Ken is going to weigh in as well, it being his list.

The problem in #10 is less about the problem with induction than about the problem of using statistical methods that depend on a large number of occurrences of a risk factor to determine its role in causation. Too rare and it's either not going to occur in your sample, or it won't reach statistical significance, even if it's 100% the cause in one or a few families.
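To put rough numbers on both halves of that point, here is a minimal sketch using only the Python standard library. The function names, carrier frequency, and study sizes are hypothetical, chosen purely for illustration, and the one-sided Fisher exact test stands in for whatever test a real study would use.

```python
from math import comb


def prob_variant_absent(carrier_freq, sample_size):
    """Chance that a random sample contains no carrier at all."""
    return (1 - carrier_freq) ** sample_size


def fisher_one_sided(case_carriers, n_cases, control_carriers, n_controls):
    """One-sided Fisher exact p-value: probability, given the table
    margins, of at least this many carriers falling among the cases."""
    total = n_cases + n_controls
    carriers = case_carriers + control_carriers
    denom = comb(total, carriers)
    p = 0.0
    for k in range(case_carriers, min(carriers, n_cases) + 1):
        p += comb(n_cases, k) * comb(n_controls, carriers - k) / denom
    return p


# Hypothetical variant carried by 1 person in 10,000, sample of 2,000 people:
print(prob_variant_absent(1e-4, 2000))

# Hypothetical study: 1,000 cases and 1,000 controls. The variant turns up
# in 3 cases and in no controls, i.e. every carrier is affected.
print(fisher_one_sided(3, 1000, 0, 1000))
```

In this made-up study the variant is more likely than not to be missing from the sample entirely, and even when every carrier is a case, three carriers give a p-value nowhere near the 5e-8 threshold conventionally used in genome-wide studies.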

I'm not quite sure of the point you're making with respect to #16. That it's possible to build statistical models that take multiple causes into account? The problem with that is that these kinds of models are built on the assumption that every case is the result of the same set of interacting causes, and we know in genetics that this isn't true. There are often many pathways to the 'same' phenotype.

But, again, Ken can better clarify his points.

I'll basically second Anne's reply. Statistical sampling in an evolutionary context leads to analysis that violates--to generally an unknown or unknowable extent--various premises that relate to interpretation, such as about error distributions, additivity and so on. And, as Anne says, the complex of factors is not the same, nor are their relative proportions, even among similar elements. Large or overwhelming numbers of causal contributors, even assuming the truth of an essentially genetic causal model (i.e., forgetting about environmental factors, somatic mutation, and so on), are too weak or rare individually to be included or to generate statistical significance. That is why multiple-causal models, while almost certainly true in principle, are problematic. The causes are not a fixed enumerable set, and it is their interaction (often too subtle to show up in additive models) that may be key to their net effect.

To me, as a rather 'philosophical' point, we do not have a very useful, much less precise, causal theory in most cases, for the statistical approach usually taken to be as apt as it's treated to be. We make what I call 'internal' comparison--like between obese and normal, case and control, and so on--and test for 'difference', rather than testing for fit to a serious-level causal theory. That is, our statistics are not just about measurement and sampling errors, which may follow reasonable distributional assumptions. If there is some underlying theory, we don't know how to separate that from the measurement sorts of issues. And if the theory is based on the fact that the essence of evolution is about the dynamics of difference, rather than the repetition of similarity, then to me statistical models are just off the mark. For strong causation, it doesn't always matter, but this may not be so for weak causation; we have little way of knowing when, whether, why or how much it matters. And even for strong causative factors things are problematic. This is the difference between physics and biology, in a sense, in my view.

I am far from any sort of expert in the modeling things you mention (though I think my comments are pertinent relative to Pearl's ideas about causation), and we might have to debate how effective things are even in the epidemiological context. The latter would depend on the objectives, which often don't require individual risk or formal understanding of causation etc. and which work effectively when causation is rather singular and strong (e.g., a particular virus; smoking; airborne lead or asbestos).

Or, perhaps, I have failed to understand your comment!

Given the differences in our background, it is quite possible that I was not clear.

Standard statistical sampling is “wholesale” and is not designed to explain singular/rare events. Case-based sampling (e.g. case-control), however, is useful for studying the causes of rare outcomes. In my very limited experience using proteomics the point was to generate candidates for interventions or other amelioratory measures. The biologists and engineers took over from there. Since we were looking for population level effects, rare events weren’t as interesting.

Premises are just that, premises, and can be changed at will. Many of the "standard" introductory statistical methods were chosen for mathematical tractability, not necessarily because they are required or appropriate for a specific application. (Fisher himself noted that.) Distributional approximations often fall into that class. They can be useful but are not required. Modern computing makes that less of a burden.

As you note, significant difference tests are not particularly conducive to model (theory) building, but they are not supposed to be. The push for additivity (e.g., in two-way ANOVA and Randomized Complete Blocks) is a hunt for regularities (“significant sameness”). Part of statistical modeling involves choosing transformations or changing the measurement to get additivity. Effects that aren’t separable on some metric scale can be quite separable and regular on an ordinal scale. (This happens in marketing when you switch from, say, 7-point liking scales to discrete choice scales. This is a classic example where the attempt to measure non-numeric states confounds the model.)

Statistical tests focus on the deductive part of the process. You find out where the theory doesn’t fit the data and get more data or generate new hypotheses. Repeatable variation/differences is the target. “Statistical significance” for one-off studies is only part of that.

My experience suggests Pearl's methods are quite useful for predicting outcomes and for hypothesis generation in a marketing/product development context, and appear to be quite useful in epidemiology, too. They are built upon non-additivity and non-parametric models. Pearl is quite explicit that statistics is not meant for causal inference. A reference to Rothman’s and Pearl’s work in evolutionary biology is in https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4063485/

WRT Anne’s comment, multiple causal pathways in epidemiology are also known as Competing Risks when the outcome is death/failure. Different potential causands can be on each path, as in Bernoulli's smallpox model. In product design, you have bundles of benefits, and the trick is to balance the benefits to beat the competition’s bundles. My original training and interest in predator-prey and competition models transferred quite nicely to epidemiology and from there to marketing models.

To your closing comment, I think we both agree that statistical tools are not about individual level effects. They are quite good at picking up smaller pervasive effects.

This will take a short route to what deserves a long answer. I can't respond to some issues you raise, because I am not a formal statistician. My point is not a complaint about statistics, but about their use when the assumptions do not fit, or not to a known or even knowable degree. We are investing heavily in strong promises and expensive but very weak mega-projects, in my view. Of course, strong patterns can be found, and even under those conditions, truly useful causative agents. But in general, with all-purpose mega-studies, and especially with weak causation, which is by far the rule, it is less clear whether a pattern means causation or correlation. That distinction is relevant because it relates to whether retrospective fitting implies predictive power, which is what is being promised.

Competing risks happen to be something I have worked with, off and on, for decades. It is as you say, but that is a double edged sword because the assumptions underlying retrospective fitting (again) don't allow automatic projection into the future. And there is a plethora of outcomes involved, and nearly countless alternative pathways to similar outcomes. Secular trends in causes of death and disease make that point very clearly, in my view, because while they (may) allow retrospective fitting, and may allow crude (to some, usually unknowable extent) prediction, they do not automatically allow clear or known or often not even knowable personal prediction. Also, as to your final point, pervasive is a key word if in using it you essentially mean cause (of the 'pervasive effects') that is always present. But in genetics and evolutionary biology this is not always the case (if it were, in a sense there would be no evolution).

These are the issues we try to raise. They also entail a difference between causal replicability, and the statistical aspects of measurement and sampling (for which, in a sense, statistical testing was developed). To me, Pearl's approach is far too formalized to be of much reliability since we don't know when or to what extent it applies: how would we know? Typically, if not necessarily, it is by replication or some other subsequent test, but replication is not required of evolution, which is a process of differentiation.

Anyway, these are to some extent at least matters of one's outlook. To me, the cogent point is to make clear to those (even in biology and genetics) who don't think carefully, that promises of genomic 'precision' and 'personalized' genomic medicine are far more about marketing, in ways that would make PT Barnum proud, than they are about serious science. Biology and genetics work well in those causal situations where their (often implicit) assumptions are more or less met, since we may not care about the details. But much of this area is about very weak, very numerous, contemporary contributory factors, and there the methods do not work in ways that, one might say, can be proved or often even tested cogently.
